Photographers know that decreasing the aperture size on their camera will produce an image with a larger depth of field. Although it’s possible to take a great picture without understanding why this is true, it doesn’t hurt to know! All we need to figure it out is a little bit of geometry and physics, plus a little knowledge about how a camera works. This is a really fascinating topic for me; it shows how a single topic can have many different levels of understanding.

The General Plan

I’m a physicist, so I’m going to approach this problem like one. My instincts tell me to first think about an utterly unrealistic situation, and then see how it has to be modified to apply in the real world. As you’ll see, it’s a pretty effective strategy. So we’ll begin by thinking about a perfect lens projecting an image of a single point onto a sensor. Before we can understand why aperture size affects depth of field, we’ll have to first understand what depth of field is; and before we can understand what depth of field is, we’ll have to first understand what focus is; and before we can understand what focus is, we’ll have to understand how a camera works and how we can describe its operations using simple pictures. This might take a while! Trust me though, it’s worth it. By the way: thinking about a camera requires you to have very clear mental pictures. There are going to be lots of pictures in this post to help you out. I don’t want to be ambiguous in any way.

Idealized Apparatus

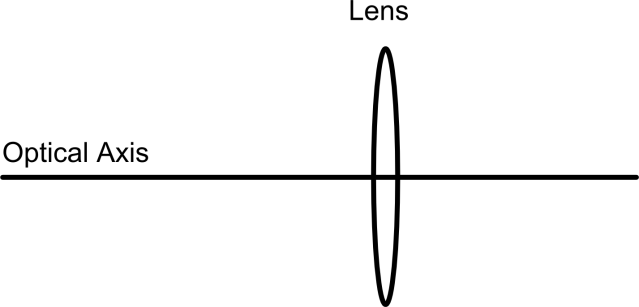

The first thing we’ll do is plunk down our lens. We’ll imagine that there’s a line running through the center of the lens. This is called the optical axis, and it helps us to define a reference point for the whole rest of our analysis. Distances will be measured along the optical axis, and heights will be measured perpendicular to it. Here’s a picture:

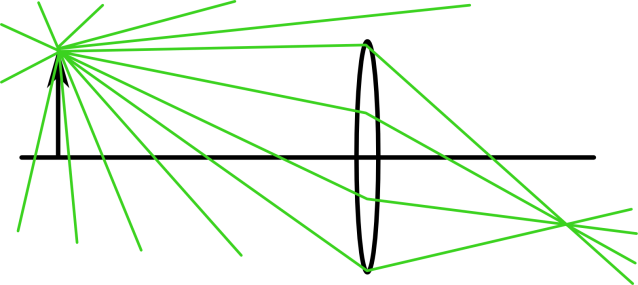

Now we’ll put our object somewhere. Like I said before, we’re just going to consider a single point for right now. A true point has no size; it’s infinitely small. This is obviously impossible to draw. People usually get around this by drawing an arrow that points to the point. It’s important to remember that the arrow is not the object; only the very tip of the arrow is the object.

This object emits light in all directions. We symbolize light in our drawings using lines. (In this case, green ones.) But here we are faced with another impossibility; we can’t draw all of the light rays. So we just draw some. Many light rays just continue forever in straight lines, but some get bent by the lens. Note that all the light rays going through the lens eventually intersect at the same spot. This is what lenses do! (Actually, this is what perfect lenses do. We’ll come back to this point later.)

We’re talking about a camera, so we don’t really care what happens to light rays that don’t go through the lens. This allows us to greatly simplify the picture by ignoring many of the light rays. In fact, we can get away with just drawing two of them! These two rays are important because they intersect with the outer edges of our lens.

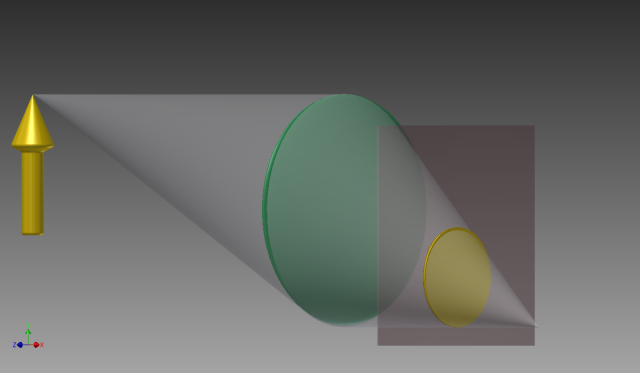

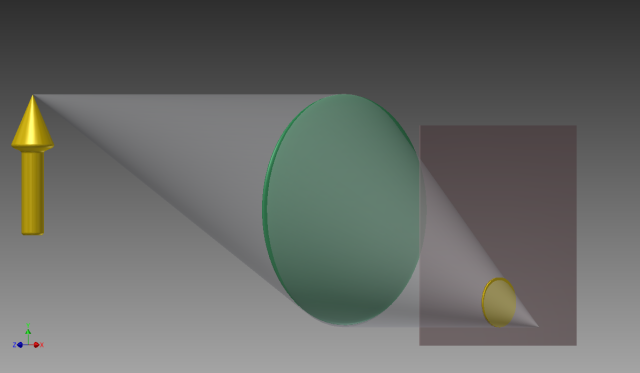

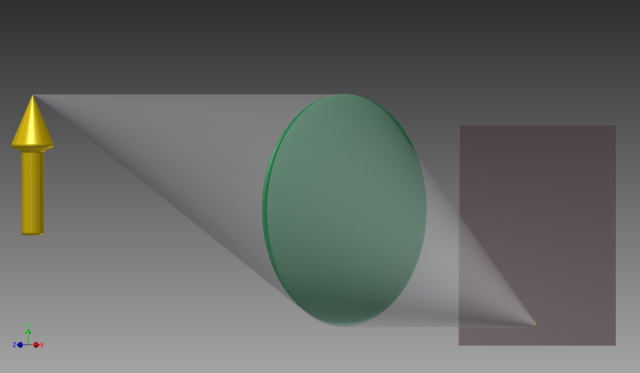

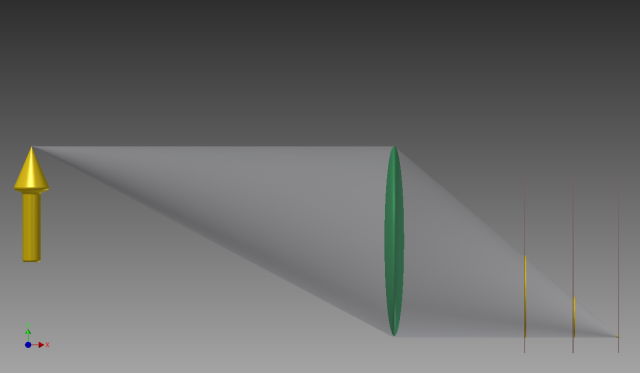

A camera is more than just a lens, though. We also need a sensor. A sensor is just some kind of flat thing that measures light. In a film camera, it’s the film, and in a digital camera, it’s an array of photodiodes. (We often call the photodiodes pixels.) An important thing to notice is that the sensor will measure different things depending on where we put it. If we place it right where the bent light rays intersect, it will measure a single point, but if we place it somewhere else, it will record a circle instead. Here are some cool 3D pictures that should explain why. The first shows the same image as the previous one, except that it’s in 3D and has been rotated a little bit. Notice that instead of drawing two rays that hit the top and bottom of the lens, I’ve drawn a bunch of rays that go all around the outside of the lens. They form a cone in 3D space instead of the triangle they formed in 2D space. (Elsewhere in this post, I’ll refer to the bent light rays as the bent light ray cone.) The next three pictures show a sensor intercepting the light rays at various spots. Two of them form circles, but one forms a single point. Finally, the last picture shows a side view of the three sensor positions so you can compare it to the 2D pictures I previously showed.

Here’s a very important thing to keep in mind: each of the 2D images above is really a side view of a 3D situation.

What is Focus?

Now that we understand what’s going on inside a camera, we are in a position to talk about focus. We can define in focus to be a situation where our sensor is positioned at the intersection of all the light rays. In this case, the sensor will only detect a single point; that’s great, because our object is just a single point! In this situation, the sensor measures a faithful representation of the object. If the sensor is placed anywhere else, it will measure a circle. This is called out of focus. The circle itself is called the circle of confusion. (I really like this name because it adds some drama to the technical description of photography. Lest you think I’m making this up, here’s the wikipedia article about it.) The circle of confusion results in a blurry image. So when you focus your camera, you’re really changing the distance between your lens and sensor with the hope that you’ll get it to the magic spot where the circle of confusion becomes a single point.
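
If you’d like to put numbers on this, here is a minimal Python sketch. It assumes an ideal thin lens (the thin lens equation, 1/f = 1/d_object + 1/d_image) and uses similar triangles to get the width of the light cone at the sensor. The function names and the sample values (a 50 mm lens that is 25 mm across, looking at a point 2 m away) are made up for illustration.

```python
def image_distance(focal_length, object_distance):
    """Distance behind the lens where rays from a point reconverge (thin lens equation)."""
    if object_distance <= focal_length:
        raise ValueError("object must be farther from the lens than the focal length")
    return 1.0 / (1.0 / focal_length - 1.0 / object_distance)


def blur_diameter(lens_diameter, focal_length, object_distance, sensor_distance):
    """Diameter of the circle of confusion on a sensor placed at sensor_distance.

    The cone is lens_diameter wide at the lens and zero wide at its tip,
    so its width at the sensor follows from similar triangles.
    """
    tip = image_distance(focal_length, object_distance)
    return lens_diameter * abs(sensor_distance - tip) / tip


# Hypothetical example, all lengths in millimetres.
f, lens_d, obj = 50.0, 25.0, 2000.0
tip = image_distance(f, obj)
for sensor in (tip - 1.0, tip, tip + 1.0):
    blur = blur_diameter(lens_d, f, obj, sensor)
    print(f"sensor at {sensor:.2f} mm -> circle of confusion {blur:.3f} mm across")
```

The circle shrinks to zero only when the sensor sits exactly at the tip of the cone; moving it either way by the same amount gives the same circle size.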

What is Depth of Field?

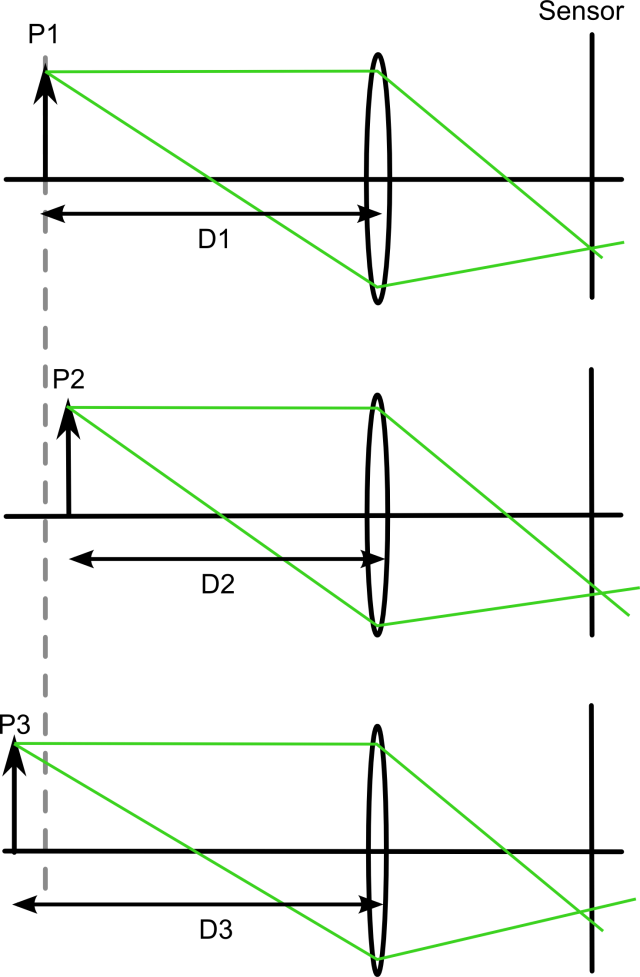

Real objects take up nonzero amounts of space, so our previous discussion about points is not really applicable to a real-life situation. However, we can think of a real object as a collection of many different points. As a first step, let’s consider just three points. Let’s suppose that we’ve focused our camera on a certain point (P1) that is a distance D1 away from the lens. P1 has some neighbors: P2 (at a distance D2) and P3 (at a distance D3). P2 is closer to the lens, while P3 is farther away. As we can see from the picture below, P2 and P3 are out of focus. This points out a serious problem with our definition of focus: only points that are a specific distance (D1 in this case) away from the lens can be in focus. If we stick to this definition, there is no way that an extended object could ever be in focus! It seems that our idealized situation is becoming inadequate.

All we need to do, though, is to slightly modify our definition of focus. Instead of defining focus to be the case where a point object produces a point image on the sensor, we can relax our standards. We can define approximate focus to be the case where a point object produces a circle of confusion that is small enough. (This is not a technical term! I made it up.) So what does small enough mean? Well, there are two versions: the physical small enough, and the physiological small enough. The physical small enough refers to the fact that any real-life camera sensor has finite resolution. For example, as mentioned before, a digital camera sensor consists of an array of pixels. These pixels are little squares that have finite size. (Say one square micrometer.) If the circle of confusion is smaller than one square micrometer, it doesn’t matter! The camera won’t be able to tell the difference between a point and a circle whose area is half a square micrometer. The physiological small enough is a bit more complicated and has to do with the angular resolution of your eye and how your brain processes visual information. It’s hard to explain, but if you try shrinking a blurry picture on your computer, I think you’ll see what I mean. If you make it small enough, it will eventually stop looking blurry. You can also try looking at a picture from very close up and from farther away. It will look better from far away! (Think of Cameron at the art museum in Ferris Bueller’s Day Off.)

Now that we’ve modified our definition of focus, we see that there is now a range of distances where points are in approximate focus. I have chosen D2 and D3 in the previous picture so that P2 and P3 are at the limits of approximate focus. So the range of approximate focus extends from D2 to D3. I call this range the focus range. (This is not standard terminology either.)

We can now define depth of field: depth of field is just the size of the focus range! Depth of field has huge impacts on photography. I’ll just mention one aspect of it. When a photographer chooses to use a small depth of field, he or she can draw attention to certain aspects of a scene. The photographer can choose a small range where things are in focus, while the rest of the image will be blurry. (The aesthetic quality of that blur actually has a name: bokeh.) Alternatively, the photographer can choose a large depth of field to emphasize all aspects of a scene. I think this technique is particularly evident in landscape photography.
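
To make the focus range concrete, here is a rough Python sketch that finds D2 and D3 for a given “small enough” circle of confusion. It is only an illustration under stated assumptions: an ideal thin lens, a sensor parked exactly where P1 comes to a point, and made-up names and numbers (`focus_range`, a 50 mm lens, a 0.03 mm blur limit).

```python
import math


def image_distance(focal_length, object_distance):
    """Thin lens equation: where rays from a point at object_distance reconverge."""
    return 1.0 / (1.0 / focal_length - 1.0 / object_distance)


def object_distance(focal_length, image_dist):
    """Inverse of image_distance; a cone tip at or inside f means 'infinitely far away'."""
    if image_dist <= focal_length:
        return math.inf
    return 1.0 / (1.0 / focal_length - 1.0 / image_dist)


def focus_range(focal_length, aperture_diameter, focus_distance, max_blur):
    """Return (D2, D3): nearest and farthest object distances in approximate focus."""
    if max_blur >= aperture_diameter:
        raise ValueError("blur limit must be smaller than the aperture")
    sensor = image_distance(focal_length, focus_distance)
    # Solve aperture * |sensor - tip| / tip = max_blur for the cone tip position.
    tip_near = aperture_diameter * sensor / (aperture_diameter - max_blur)  # tip behind sensor
    tip_far = aperture_diameter * sensor / (aperture_diameter + max_blur)   # tip in front of sensor
    return object_distance(focal_length, tip_near), object_distance(focal_length, tip_far)


# Hypothetical example, all lengths in millimetres.
d2, d3 = focus_range(focal_length=50.0, aperture_diameter=25.0,
                     focus_distance=2000.0, max_blur=0.03)
print(f"focus range: {d2:.0f} mm to {d3:.0f} mm, depth of field {d3 - d2:.0f} mm")
```

Calling `focus_range` again with a different `aperture_diameter` or `max_blur` is a quick way to see how the focus range responds to each.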

So that was just the prelude to the main point of this article. We’re finally ready to talk about the effect of the aperture size on the depth of field! Before we continue any further, I should explain what an aperture is. An aperture is basically a hole that you place in front of your lens. You can adjust its size, which essentially shrinks or increases the effective size of your lens. It turns out that there are actually two reasons why a small aperture increases depth of field.

Reason 1: Geometry

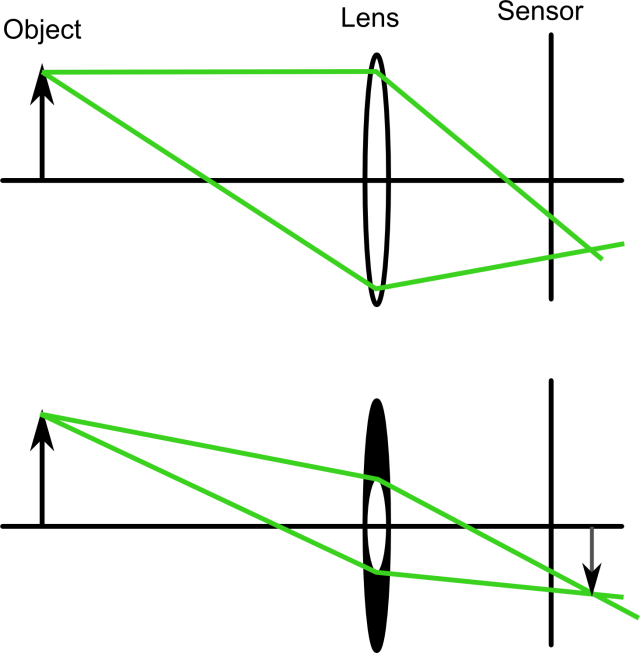

The first is entirely based on geometry. It has to do with the fact that shrinking the aperture makes the “bent light cone” get narrower, which in turn shrinks the circle of confusion. This allows for a wider focus range and hence a larger depth of field. The picture below will help you visualize this. The top shows the lens with the widest possible aperture, while the bottom shows the lens with a much smaller aperture.
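
The geometric argument boils down to similar triangles, and a few lines of Python can show it. This is a sketch rather than a camera model: `cone_length` (the distance from the lens to the tip of the cone) and `sensor_offset` (how far the sensor sits from that tip) are hypothetical parameters with made-up values.

```python
def blur_diameter(aperture_diameter, cone_length, sensor_offset):
    """Width of the bent light cone at the sensor.

    The cone is aperture_diameter wide at the lens and narrows to zero at its
    tip, cone_length behind the lens. The sensor sits sensor_offset away from
    the tip (in either direction), so similar triangles give the width there.
    """
    return aperture_diameter * abs(sensor_offset) / cone_length


# Same lens-to-tip distance and same sensor position; only the aperture changes.
for aperture in (25.0, 12.5, 6.25):  # millimetres, hypothetical
    blur = blur_diameter(aperture, cone_length=51.3, sensor_offset=0.5)
    print(f"aperture {aperture:5.2f} mm -> circle of confusion {blur:.4f} mm")
```

Halving the aperture halves the circle of confusion, so a point can sit farther from the plane of best focus before its circle stops being small enough.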

Reason 2: Lens Construction

The other reason has to do with the fact that real-world lenses are not perfect. Back in the beginning of this post, I made the assumption that “all the light rays going through the lens eventually intersect at the same spot.” This is actually only true for a certain kind of lens, called a “parabolic lens.” The trouble with parabolic lenses is that they’re very hard to make. Instead, most cameras use spherical lenses. Spherical lenses introduce so-called “spherical aberration,” which is the effect that light hitting the outside of the lens is actually focused to a different spot than light hitting the inside of the lens. This effect is shown in the picture below. The top shows a perfect lens, while the bottom shows a more realistic one. The net effect is that spherical aberration increases the size of the circle of confusion. In fact, it makes it impossible for the sensor to ever measure a single point. By blocking the outside light with an aperture, you can consequently shrink the circle and increase the depth of field.
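
For a feel of how big this effect is, here is a small ray-tracing sketch in Python. It is a deliberately simplified stand-in for a real lens: a single spherical air-to-glass surface, incoming rays parallel to the optical axis, and an assumed refractive index of 1.5. The function name and all values are illustrative assumptions.

```python
import math


def axis_crossing(height, radius=1.0, n_glass=1.5):
    """Where a ray parallel to the axis crosses the axis after one refraction.

    The ray travels at `height` above the optical axis and hits a spherical
    glass surface of the given radius whose vertex is at x = 0.
    Requires 0 < height < radius.
    """
    theta_in = math.asin(height / radius)                 # angle of incidence
    theta_out = math.asin(math.sin(theta_in) / n_glass)   # Snell's law (air has index 1)
    x_hit = radius - math.sqrt(radius**2 - height**2)     # where the ray meets the surface
    # After refraction the ray tilts toward the axis by (theta_in - theta_out).
    return x_hit + height / math.tan(theta_in - theta_out)


# Small-angle (paraxial) prediction for the focus: n * R / (n - 1).
print("paraxial focus:", 1.5 * 1.0 / (1.5 - 1.0))
for h in (0.01, 0.2, 0.4, 0.6):
    print(f"ray at height {h:.2f} crosses the axis at x = {axis_crossing(h):.4f}")
```

Rays near the axis cross close to the paraxial focus, while rays farther out cross earlier. Blocking the outer rays with an aperture keeps only the ones that nearly agree on where the focus is.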

Further Thoughts

There are a few things I glossed over or didn’t talk about in the post, which I encourage you to think about. For example:

- Is the circle of confusion actually a circle? (Not always. When is it a circle, and what is it when it’s not a circle? Hint: this wikipedia page.)

- Which small enough (physical or physiological) is usually more important in determining depth of field?

- Is the concept of physiological small enough only important in photography? (Hint: pointillism.)

- Why does a parabola focus all incoming rays to the same point? (Here’s the answer. Note that strictly speaking, a parabolic mirror only focuses all rays that are parallel to its optical axis…)

- Which reason (geometry or spherical aberration) is more important in increasing depth of field? (It depends, but on what?)

- Is there a way to differentiate between the two effects? (Think about what happens at the tip of the bent light cone.)

- Are there other lens defects besides spherical aberration? (Yes, check out this great site for a few others.)

- Is aperture size the only way to reduce spherical aberration? (Hint: this wikipedia article.)

- Why is it impossible to get a true bokeh effect with a point-and-shoot camera?

- Does depth of field depend on the size of the object you’re photographing? (No, but why?)

- Does depth of field depend on the focal length of the lens you’re using? (Yes, but how?)

- Does depth of field depend on how far away your object is? (Yes, but how?)

- Is the focus range symmetric around the point of best focus? (No, but why?)

- Will shrinking the aperture always make your image in better focus? (No, but why? Hint: think about very small apertures…)

As an aside, if you want to know how I determined the location of the light ray intersection in my pictures, check out the thin lens equation and geometric optics.

What a great description! The methodical way you presented this helped me understand things about optics that have always been a little fuzzy to me (intentional play on words!) Thanks!

Fantastic. Well done. Much appreciated. Easy to understand. This is something I’ve wondered about for years. Now I know!

Thanks a lot for your explanation. I recently began studying photography and this really helps me understand. I will post a link to this post. Bye!

Thanks for the link, I’m glad you liked my post!

[…] amount of the picture that is sharp and vice versa. I found a brief and very simple explanation (https://physicssoup.wordpress.com/2012/05/18/why-does-a-small-aperture-increase-depth-of-field/). It helped me a lot to understand why this […]

Hi

My son is teaching photography to year 10 students in Melbourne in July-August. He is an art teacher but knows very little about photography. As a fast learning photographer, in my recent retirement, I am writing a course for him. Your explanation of Depth of Field is the best I have seen. Can I have permission to use some of your diagrams in the notes I am providing for him. They will not be sold and I can give attribution to you. I would need details to do this. Kind Regards Barry Kearney bdkearney@gmail.com

Hi Barry,

I’m glad you found my post to be useful! Thanks for asking me about using my diagrams. Sure, you can use them. Just attribute them to my name (Ed Lochocki) and this blog (https://physicssoup.wordpress.com).

-Ed

This is a very clear explanation and very easy to follow. Could you give explanations for why focal length and distance also affect depth of field, or should I be able to derive those from the information here? Thanks.

Thanks for the great question and sorry for my late response! The short answer is that the ideas presented here are not quite enough to understand how object distance and focal length affect depth of field. For a longer answer, please see my new post: https://physicssoup.wordpress.com/2015/04/26/how-do-object-distance-and-focal-length-affect-depth-of-field/

Hi Ed Lochocki,

What an amazing explanation and great article!

I am beginner of DSLR photography and always wondered how aperture controls the DOF of the image. This makes it crystal clear and logical to understand to an extent that I can never forget the relationship.

Good Stuff! Keep it up! Thanks a lot,

Dude. Epic explanation man. Made sense from start to finish 🙂 Well done

wow. physics behind very basics of photography so well explained. thanks a mill!

Excellent explanation. Thanks for taking time to create this.

subbed!

I teach sensor cleaning and would love to be able to explain why dust particles appear on images at smaller aperture opening but not on larger ones. Also why does it make a difference when the filter in front of the sensor which has the dust particle on it is farther or closer to the actual sensor.

The best explanation of how aperture affects DoF that I have ever read! Thanks.

Very useful article. And wonderfully illustrative figures. Thanks!

That was great…now could you please explain to me what chromosomes are?

(I’m only half kidding)

Thanks, but don’t expect any chromosome posts… that’s a bit outside of my wheelhouse!

Circle of confusion, no more! Thank you so much for your explanation of that term!!

[…] the smaller your aperture is, the more will be in focus. Having more in focus works in photography situations such as city scapes and landscapes, where […]

I had a few questions. (1) Suppose you have an aperture with a small opening and one with a larger opening. Wouldn’t you lose more information (i.e., light reflecting off the object at certain angles) for the aperture with the smaller opening – because you’d essentially be blocking the light signals that reflect at sharper angles, thus reducing your field of view? (2) Also, how do the distances of objects play a role in digital processing; for example, if I were to take a picture of myself and of an air balloon far in the background, would the image result in the air balloon essentially being some time units behind, since the light from the balloon takes longer to reach the camera; or is this only significant at very very large distances? Thanks!!!

Thanks for thinking about this post and asking questions!

(1) That’s a really interesting question and I’ve never thought about it before! It’s definitely true that shrinking your aperture could cause your camera to miss a narrow beam of light. However, this would not really be a change to your depth of field, in the same way that pointing your camera in a different direction (to collect light from different objects) would not change the depth of field. I also don’t think it’s fair to say that information is lost. I think there is simply a change in the information contained in the picture, since the “lost” light rays will be replaced by other light rays from something else. Now that you brought this up, I’m wondering if I could engineer this type of situation and give it a test. My guess is that this effect is rarely important, but it’s still interesting.

(2) Even under ideal conditions, the presence of Earth’s atmosphere makes it difficult to see objects more than 100 miles away (according to the Wikipedia page for “visibility”). A light ray would require around 500 microseconds to travel this distance. As a comparison, a typical human blink lasts for 100-400 milliseconds. So even at the extreme distance of 100 miles, the time lag would not be very significant. Of course, it would eventually become important at very large distances. As you probably know, sunlight takes 8 minutes to reach Earth, and the cosmic microwave background has been traveling for over 13 billion years before arriving at our telescopes.

Thanks for the wonderful explanation. I like how you mentioned that information isn’t lost, but changed, so now I’m thinking that rather than the information being lost due to the loss of a beam from a narrower aperture, that information still exists thanks to the vast number of other beams that have found their way to the lens, and only a small piece of information from those beams has been lost.

I’d be interested to know how you’d test the loss of information due to a narrower aperture (if that’s the right question to test); with my limited knowledge of how light interacts with matter, I can only think of having a closed system/box that is absent of light, where one side is the camera (encased in non-reflective material with an adjustable aperture) and on the other side is a sheet whose face is parallel to that of the lens; a vertical “line” of light can then pass through from right-to-left or vice versa. So no light will reflect from the surroundings, and instead, you’d have the light only reflecting off the object. Thus, when narrowing the aperture, depending on how the light reflects off the object (e.g., straight back vs spread out like a cone), then you might actually see a loss in a significant amount of information/light that never reached the narrowed aperture lens due to the manner in which light reflects off the object and towards the lens, while making sure there is no other light being introduced from anywhere else.

Hi, first of all I found your explanation brilliant, it really helped me out to understand depth of field!

Regarding Sameed Jamil’s question, I think it was about the change to field of view and not to depth of field. I am also interested in whether changing the aperture would affect the field of view ever so slightly.

What an outstanding attempt to clarify things….many thanks

[…] you’re into physics, have a look at this explanation why a small aperture increases the depth of […]
